When considering the sheer complexity of the brain, an interesting quote by Emerson Pugh always comes to mind:

“If the human brain were so simple that we could understand it, we would be so simple that we couldn’t.”

It’s an apt illustration of the challenge neuroscientists face in pursuit of understanding the brain, as we strive to answer questions like “where does consciousness come from?” or “why do we dream?”

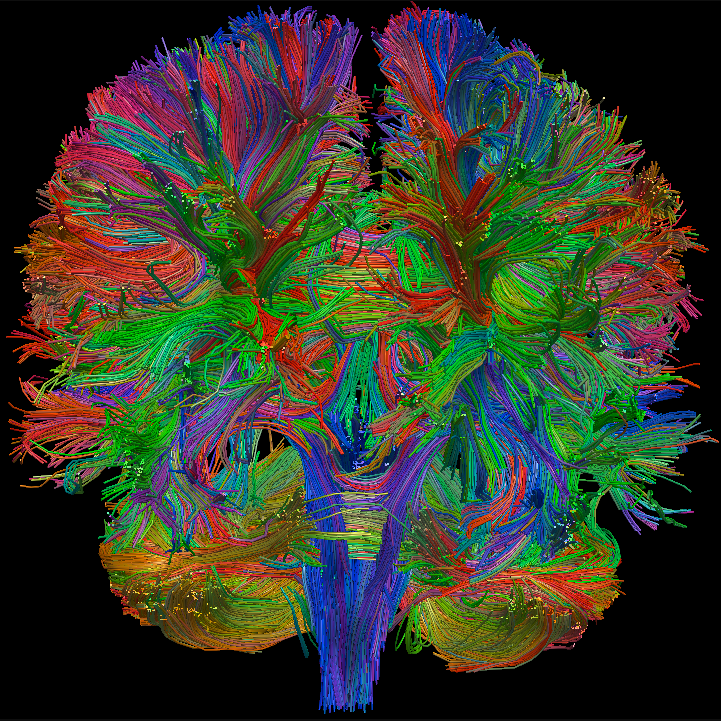

Some of this complexity stems from the structure of the brain itself. An average of 86 billion neurons [1] and 100 to 1000 trillion synapses [2] form the circuitry. 163 brain regions interacting to form 111 functional networks serve as the basis for cognition and behavior [3]. More impressively, the brain is constantly changing, whether as a result of synaptic pruning due to aging, synaptic plasticity in response to learning, or chemical imbalances associated with psychiatric illness. Modeling the brain to capture this level of intricacy, from the molecular level to that of large-scale networks, is no small feat. We can sample neural activity at a fraction of a millisecond and visualize interacting brain regions at the resolution of millimeters, but how do we model this information and use it to explain phenomena we observe on a daily basis?

Figure 1: Diffusion Tensor Imaging (DTI) revealing white matter connections between various brain regions. From: Medical Daily.

Artificial Intelligence to explain…human intelligence?

Artificial intelligence (A.I.) has been making massive headlines in recent years. It was only 23 years ago that IBM’s Deep Blue defeated Garry Kasparov, the world champion at the time, at chess. Now, A.I. applications range from autonomous driving to speech recognition and medical diagnosis. A.I. refers to the intelligent execution of a task. One application involves creating models based on information, such as meteorological (weather) information or pictures of animals. In turn, the type of information you provide your model with determines the type of predictions it makes, like forecasting next week’s temperature or distinguishing dogs from cats. One of the defining paradigm shifts separating earlier iterations of A.I. from the ones performing impressive feats today is their ability to learn. Previously, knowledge would be pre-programmed into these models, preventing them from generating their own abstractions or drawing novel solutions. The rise of machine learning has provided models with the necessary inductive capabilities to learn from the data they’re provided, allowing them to improve. Imagine if you were to provide a model with fMRI data from thousands of patients with a particular psychiatric illness over a period of time; hypothetically, the model could learn early predictors of the illness in seemingly healthy patients. In practice, it’s not that simple (a discussion for another post).

Figure 2: Left - IBM’s Deep Blue playing Garry Kasparov. Right – Google Assistant and Apple’s Siri, famous speech recognition software. Images from: Scientific American and The Verge.

In fact, a major hallmark of human intelligence is the capability to use experience to adaptively shape behavior. We are always learning, with our brain constantly changing as a result. Unsurprisingly, drawing inspiration from our own neural mechanisms improves the capabilities of these machine learning models (our brain IS considered the most advanced and efficient supercomputer ever built for a reason). However, the ability to capture such a fundamental aspect of the brain raises an important question: would a model that incorporates a sufficient number of brain-like mechanisms allow us to better approximate a model of the brain itself?

A Less Artificial A.I. - Neural networks

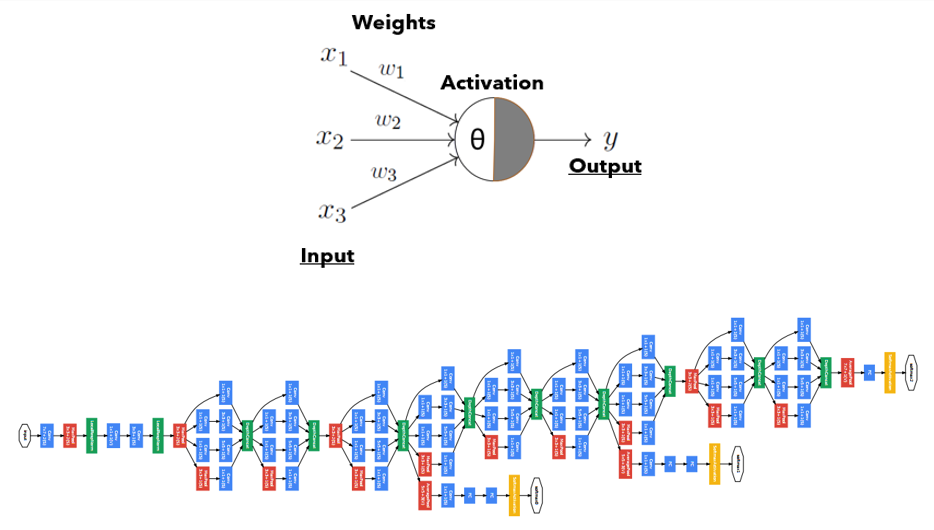

Neural networks refer to a class of models that are inspired by our own biological neural networks. They serve as the basis for convolutional neural networks and deep neural networks, the complex models underlying modern applications of A.I. For example, a perceptron, which is considered the first neural network and the basis for subsequent models, consists of an artificial neuron or “node” that takes in several weighted inputs (weighting determines the relative importance of an input), sums these inputs, and produces an output based on some function. The biological equivalent would be how a neuron receives many pre-synaptic inputs of varying magnitudes and fires based on the summation of these signals.
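To make the description above concrete, here is a minimal sketch of a single perceptron in Python. It is an illustration only: the step function used as the output function, the bias term, and the hand-picked weights (chosen so the node behaves like a logical AND) are assumptions made for this example, not something taken from a specific model.

```python
def perceptron(inputs, weights, bias=0.0):
    """Sum the weighted inputs, then 'fire' (return 1) if the total is above zero."""
    # Each weight sets the relative importance of its input.
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    # Step activation: an assumed, very simple choice of output function.
    return 1 if total > 0 else 0


# Hypothetical weights and bias: the node only fires when both inputs are active.
and_weights = [1.0, 1.0]
and_bias = -1.5

outputs = [
    perceptron([a, b], and_weights, and_bias)
    for a in (0, 1)
    for b in (0, 1)
]
print(outputs)
```

Much like a neuron that needs enough pre-synaptic input to reach threshold, this node stays silent unless the summed input is strong enough. In a real network the weights would be learned from data rather than set by hand.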

Figure 3: Top – A single perceptron. Bottom – A deep convolutional neural network (GoogLeNet). Adapted from Towards Data Science and Szegedy et al., 2015 [4]

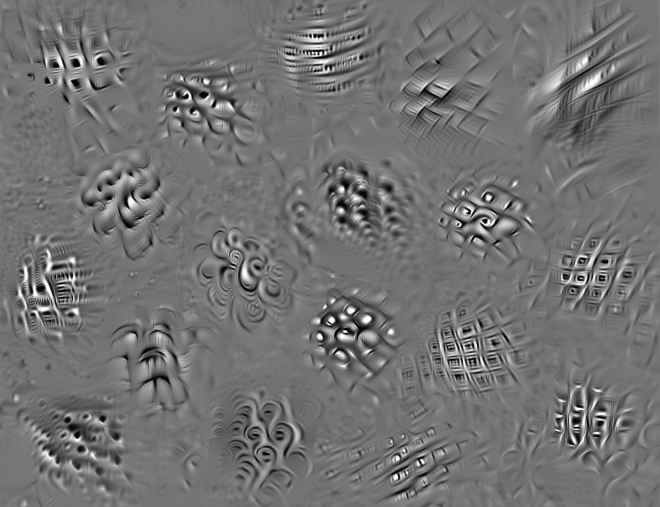

Perceptrons capture a basic level of neural processing. More complex neural networks capture additional brain dynamics to different extents. Convolutional neural networks draw inspiration from the visual cortex, and deep neural networks mimic the hierarchy of our brain regions. While it may not be necessary to have a purely brain-inspired neural network to model a specific facet of brain activity, biologically inspired neural mechanisms continue to prove useful in producing good models. For example, in an advanced application of neural networks, Bashivan, Kar and DiCarlo (2019) [5] took a neural network that utilized knowledge of EEG activity in response to specific stimuli in order to generate synthetic stimuli. These synthetic stimuli were capable of driving activity 68% above the levels observed for the images the network was trained on. Furthermore, the network was also capable of generating stimuli that elicited activity in neurons at specific sites while suppressing activity at other neural sites. These findings are an example of how neural networks are capable of capturing a fundamental aspect of brain function and the dynamics behind what drives its activity.

Figure 4: An example of the synthetic stimuli generated by a neural network used to drive EEG activity at specific neural sites in the V4 of macaque monkeys. From Bashivan et al., 2019

Neural networks may not tell us why we dream. But our knowledge of the brain has inspired impressive advances in the field of A.I., and A.I., in turn, shows potential as an interpretative tool for understanding the brain. Continuing to pursue this mutually beneficial relationship can yield important insights into both neuroscience and A.I. As to how far off we are from an actual model of the brain, one that we can dissect the same way we would a biological one…I guess you’ll find out in the next post.